
K8S Implementation Plan

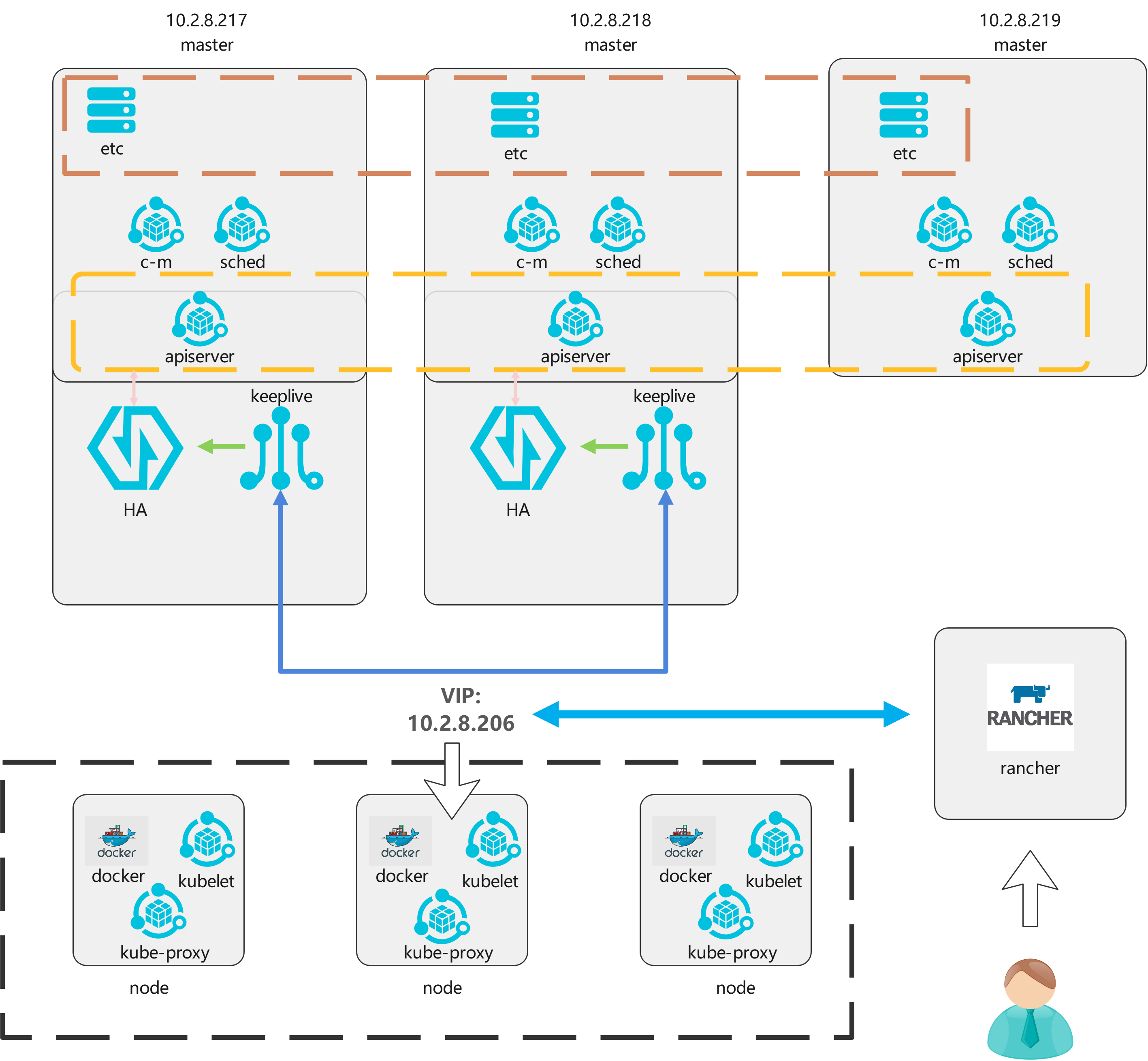

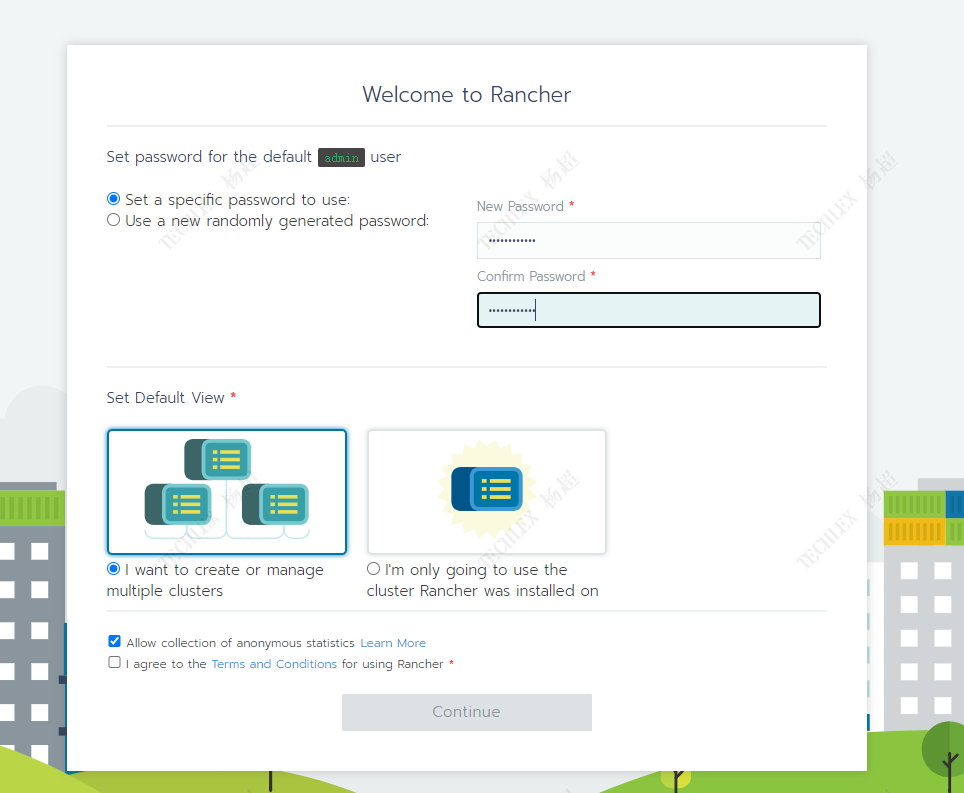

# 1. Server Planning

## 1.1 Server Selection

| Node type | CPU / RAM / disk | Count | Operating system |
| --- | --- | --- | --- |
| master | 2 cores / 4 GB / 100 GB | 3 | CentOS Linux release 7.9.2009 (Core) |
| worker | 8 cores / 32 GB / 200 GB | 3 | CentOS Linux release 7.9.2009 (Core) |
| nfs | 2 cores / 4 GB / 200 GB | 1 | CentOS Linux release 7.9.2009 (Core) |
| rancher | 2 cores / 4 GB / 100 GB | 1 | CentOS Linux release 7.9.2009 (Core) |
| Image registry | 2 cores / 4 GB / 200 GB | 1 | CentOS Linux release 7.9.2009 (Core) |

## 1.2 Server Architecture Diagram

# 2. Building a Highly Available Kubernetes Architecture

In production, the Master must be highly available and access to it must be secured. At a minimum this means:

- The Master's kube-apiserver, kube-controller-manager, and kube-scheduler services run as multiple instances on at least 3 nodes.
- The Master uses HTTPS with CA-based certificate authentication.
- etcd runs as a cluster of at least 3 nodes.
- The etcd cluster uses HTTPS with CA-based certificate authentication.

In the configuration below, the three masters (10.2.8.217, 10.2.8.218, 10.2.8.219) also host the three etcd members, and 10.2.8.206 is the load balancer's virtual IP.

## 2.1 Base Environment Preparation

Run these steps on every node.

### 1. Set the Hostname

```bash
hostnamectl set-hostname <hostname>
```

### 2. Configure /etc/hosts

```bash
cat >> /etc/hosts << EOF
IP1 hostname1
IP2 hostname2
IP3 hostname3
IP4 hostname4
EOF
```

### 3. Disable the Firewall

```bash
systemctl stop firewalld && systemctl disable firewalld
```

### 4. Disable SELinux

```bash
# Disable for the current boot
setenforce 0
# Disable permanently (takes effect after a reboot)
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
```

### 5. Disable Swap

```bash
# Turn swap off now
swapoff -a
# Keep it off after a reboot by commenting out the swap entries in /etc/fstab
sed -ri 's/.*swap.*/#&/' /etc/fstab
```

### 6. Synchronize the Clock

```bash
yum install ntpdate -y
# Synchronize once
ntpdate time.windows.com
# Synchronize every hour; entries in /etc/crontab need a user field
echo "0 * * * * root /usr/sbin/ntpdate time.windows.com" >> /etc/crontab
```

### 7. Install Docker

```bash
# Remove old Docker versions
sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine
# Install dependencies
sudo yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2
# Use the Aliyun mirror of the Docker CE repository (CentOS 7 only)
sudo yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Install docker-ce
sudo yum install -y docker-ce docker-ce-cli containerd.io
# Start Docker and enable it at boot
sudo systemctl enable docker && sudo systemctl start docker
```

## 2.2 etcd High Availability

### 1. Create the CA Root Certificate

```bash
# All masters share one CA root certificate.
# ca.key and ca.crt live in /etc/kubernetes/pki; copy both to the same path on the other masters.
mkdir -p /etc/kubernetes/pki
cd /etc/kubernetes/pki
openssl genrsa -out ca.key 2048
openssl req -x509 -new -nodes -key ca.key -subj "/CN=10.2.8.217" -days 36500 -out ca.crt
```

### 2. Download the etcd Binaries and Configure the systemd Service

Step 1: download the official etcd release.

```bash
ETCD_VER=v3.4.13
# choose either URL
GOOGLE_URL=https://storage.googleapis.com/etcd
GITHUB_URL=https://github.com/etcd-io/etcd/releases/download
DOWNLOAD_URL=${GOOGLE_URL}
rm -f /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz
rm -rf /tmp/etcd-download-test && mkdir -p /tmp/etcd-download-test
curl -L ${DOWNLOAD_URL}/${ETCD_VER}/etcd-${ETCD_VER}-linux-amd64.tar.gz -o /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz
tar xzvf /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz -C /tmp/etcd-download-test --strip-components=1
rm -f /tmp/etcd-${ETCD_VER}-linux-amd64.tar.gz
/tmp/etcd-download-test/etcd --version
/tmp/etcd-download-test/etcdctl version
```

Step 2: copy etcd and etcdctl to /usr/bin.

```bash
cp /tmp/etcd-download-test/etcd /tmp/etcd-download-test/etcdctl /usr/bin/
```

Step 3: run etcd as a systemd service.

```ini
# /usr/lib/systemd/system/etcd.service
[Unit]
Description=etcd key-value store
Documentation=https://github.com/etcd-io/etcd
After=network.target

[Service]
EnvironmentFile=/etc/etcd/etcd.conf
ExecStart=/usr/bin/etcd
Restart=always

[Install]
WantedBy=multi-user.target
```

### 3. Create the etcd Certificates

All etcd members use certificates signed by the shared CA created above. Work in /etc/etcd/pki:

```bash
mkdir -p /etc/etcd/pki
cd /etc/etcd/pki
```

Step 1: create etcd_ssl.cnf. The SAN entries must be the etcd members' IP addresses, the same ones used in etcd.conf below.

```ini
[ req ]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[ req_distinguished_name ]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[ alt_names ]
IP.1 = 10.2.8.217
IP.2 = 10.2.8.218
IP.3 = 10.2.8.219
```

Step 2: generate the etcd server certificate in /etc/etcd/pki.

```bash
openssl genrsa -out etcd_server.key 2048
openssl req -new -key etcd_server.key -config etcd_ssl.cnf -subj "/CN=etcd-server" -out etcd_server.csr
openssl x509 -req -in etcd_server.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile etcd_ssl.cnf -out etcd_server.crt
```

Step 3: generate the etcd client certificate in /etc/etcd/pki, then copy /etc/etcd/pki to the other etcd nodes.

```bash
openssl genrsa -out etcd_client.key 2048
openssl req -new -key etcd_client.key -config etcd_ssl.cnf -subj "/CN=etcd-client" -out etcd_client.csr
openssl x509 -req -in etcd_client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile etcd_ssl.cnf -out etcd_client.crt
```

### 4. Edit the etcd Configuration

The three files differ only in ETCD_NAME and the node's own IP address.

```bash
# /etc/etcd/etcd.conf - node 1
ETCD_NAME=etcd1
ETCD_DATA_DIR=/etc/etcd/data
ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://10.2.8.217:2379
ETCD_ADVERTISE_CLIENT_URLS=https://10.2.8.217:2379
ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_LISTEN_PEER_URLS=https://10.2.8.217:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://10.2.8.217:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="etcd1=https://10.2.8.217:2380,etcd2=https://10.2.8.218:2380,etcd3=https://10.2.8.219:2380"
ETCD_INITIAL_CLUSTER_STATE=new

# /etc/etcd/etcd.conf - node 2
ETCD_NAME=etcd2
ETCD_DATA_DIR=/etc/etcd/data
ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://10.2.8.218:2379
ETCD_ADVERTISE_CLIENT_URLS=https://10.2.8.218:2379
ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_LISTEN_PEER_URLS=https://10.2.8.218:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://10.2.8.218:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="etcd1=https://10.2.8.217:2380,etcd2=https://10.2.8.218:2380,etcd3=https://10.2.8.219:2380"
ETCD_INITIAL_CLUSTER_STATE=new

# /etc/etcd/etcd.conf - node 3
ETCD_NAME=etcd3
ETCD_DATA_DIR=/etc/etcd/data
ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://10.2.8.219:2379
ETCD_ADVERTISE_CLIENT_URLS=https://10.2.8.219:2379
ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_LISTEN_PEER_URLS=https://10.2.8.219:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://10.2.8.219:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="etcd1=https://10.2.8.217:2380,etcd2=https://10.2.8.218:2380,etcd3=https://10.2.8.219:2380"
ETCD_INITIAL_CLUSTER_STATE=new
```

### 5. Start the etcd Cluster

Run on all three etcd nodes:

```bash
systemctl daemon-reload
systemctl restart etcd && systemctl enable etcd
# Check the cluster's health
etcdctl --cacert=/etc/kubernetes/pki/ca.crt \
    --cert=/etc/etcd/pki/etcd_client.crt \
    --key=/etc/etcd/pki/etcd_client.key \
    --endpoints=https://10.2.8.217:2379,https://10.2.8.218:2379,https://10.2.8.219:2379 \
    endpoint health
```

## 2.3 Highly Available Master Cluster

### 1. Download the Kubernetes Server Binaries

```bash
wget https://dl.k8s.io/v1.19.0/kubernetes.tar.gz
tar xf kubernetes.tar.gz
cd kubernetes/cluster
# Downloads kubernetes-server-linux-amd64.tar.gz into kubernetes/server
./get-kube-binaries.sh
cd ../server
tar -xf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
# Copy the executables to /usr/bin
cp apiextensions-apiserver kubeadm kube-aggregator kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler mounter /usr/bin
```

### 2. Deploy the kube-apiserver Service

Step 1: create master_ssl.cnf in /etc/kubernetes/pki. The DNS entries are the master hostnames (k8s-1, k8s-2, k8s-3); the IP entries are the masters (10.2.8.217, 10.2.8.218, 10.2.8.219), the load balancer's virtual IP (10.2.8.206), and 169.169.0.1, the first address of the Service CIDR 169.169.0.0/16, which the `kubernetes` Service uses.

```ini
# /etc/kubernetes/pki/master_ssl.cnf
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster.local
DNS.5 = k8s-1
DNS.6 = k8s-2
DNS.7 = k8s-3
IP.1 = 169.169.0.1
IP.2 = 10.2.8.217
IP.3 = 10.2.8.218
IP.4 = 10.2.8.219
IP.5 = 10.2.8.206
```

Step 2: create the kube-apiserver server certificate in /etc/kubernetes/pki.

```bash
cd /etc/kubernetes/pki
openssl genrsa -out apiserver.key 2048
openssl req -new -key apiserver.key -config master_ssl.cnf -subj "/CN=10.2.8.217" -out apiserver.csr
openssl x509 -req -in apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile master_ssl.cnf -out apiserver.crt
```

Step 3: manage kube-apiserver with systemd.

```ini
# /usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/apiserver
ExecStart=/usr/bin/kube-apiserver $KUBE_API_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

Step 4: create the configuration file /etc/kubernetes/apiserver.

```bash
# /etc/kubernetes/apiserver
KUBE_API_ARGS="--insecure-port=0 \
--secure-port=6443 \
--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \
--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \
--client-ca-file=/etc/kubernetes/pki/ca.crt \
--apiserver-count=3 --endpoint-reconciler-type=master-count \
--etcd-servers=https://10.2.8.217:2379,https://10.2.8.218:2379,https://10.2.8.219:2379 \
--etcd-cafile=/etc/kubernetes/pki/ca.crt \
--etcd-certfile=/etc/etcd/pki/etcd_client.crt \
--etcd-keyfile=/etc/etcd/pki/etcd_client.key \
--service-cluster-ip-range=169.169.0.0/16 \
--service-node-port-range=30000-32767 \
--allow-privileged=true \
--logtostderr=false --log-dir=/var/log/kubernetes --v=0"
```

Step 5: start kube-apiserver and enable it at boot.

```bash
# The Kubernetes services log to /var/log/kubernetes; create it first
mkdir -p /var/log/kubernetes
systemctl daemon-reload
systemctl start kube-apiserver && systemctl enable kube-apiserver
```

### 3. Create the Client Certificate

kube-controller-manager, kube-scheduler, kubelet, and kube-proxy use this client certificate to connect to kube-apiserver.

```bash
# The files are written directly to /etc/kubernetes/pki
cd /etc/kubernetes/pki
openssl genrsa -out client.key 2048
openssl req -new -key client.key -subj "/CN=admin" -out client.csr
openssl x509 -req -in client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out client.crt -days 36500
```

### 4. Create the kubeconfig Clients Use to Connect to kube-apiserver

Create /etc/kubernetes/kubeconfig. `server` points at the load balancer's virtual IP and HAProxy port (10.2.8.206:9443), so clients keep working when a single master fails.

```yaml
# /etc/kubernetes/kubeconfig
apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://10.2.8.206:9443
    certificate-authority: /etc/kubernetes/pki/ca.crt
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/pki/client.crt
    client-key: /etc/kubernetes/pki/client.key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default
```

### 5. Deploy the kube-controller-manager Service

Step 1: manage kube-controller-manager with systemd.

```ini
# /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/controller-manager
ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

Step 2: create the configuration file /etc/kubernetes/controller-manager.

```bash
# /etc/kubernetes/controller-manager
KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--leader-elect=true \
--service-cluster-ip-range=169.169.0.0/16 \
--service-account-private-key-file=/etc/kubernetes/pki/apiserver.key \
--root-ca-file=/etc/kubernetes/pki/ca.crt \
--log-dir=/var/log/kubernetes --logtostderr=false --v=0"
```

Step 3: start kube-controller-manager and enable it at boot.

```bash
systemctl daemon-reload
systemctl start kube-controller-manager && systemctl enable kube-controller-manager
```

### 6. Deploy the kube-scheduler Service

Step 1: manage kube-scheduler with systemd.

```ini
# /usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/scheduler
ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

Step 2: create the configuration file /etc/kubernetes/scheduler.

```bash
# /etc/kubernetes/scheduler
KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--leader-elect=true \
--logtostderr=false --log-dir=/var/log/kubernetes --v=0"
```

Step 3: start kube-scheduler and enable it at boot.

```bash
systemctl daemon-reload
systemctl start kube-scheduler && systemctl enable kube-scheduler
```

### 7. Deploy a Highly Available Load Balancer with HAProxy and keepalived

HAProxy and keepalived run on two machines. HAProxy listens on port 9443 and forwards to kube-apiserver (port 6443) on the three masters; keepalived moves the virtual IP 10.2.8.206 to the surviving machine when HAProxy fails.

Step 1: create haproxy.cfg.

```
# haproxy.cfg
global
    log         127.0.0.1 local2
    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4096
    user        haproxy
    group       haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode                    http
    log                     global
    option                  httplog
    option                  dontlognull
    option http-server-close
    option forwardfor       except 127.0.0.0/8
    option                  redispatch
    retries                 3
    timeout http-request    10s
    timeout queue           1m
    timeout connect         10s
    timeout client          1m
    timeout server          1m
    timeout http-keep-alive 10s
    timeout check           10s
    maxconn                 3000

frontend kube-apiserver
    mode                 tcp
    bind                 *:9443
    option               tcplog
    default_backend      kube-apiserver

listen stats
    mode                 http
    bind                 *:8888
    stats auth           admin:password
    stats refresh        5s
    stats realm          HAProxy\ Statistics
    stats uri            /stats
    log                  127.0.0.1 local3 err

backend kube-apiserver
    mode        tcp
    balance     roundrobin
    server  k8s-master1 10.2.8.217:6443 check
    server  k8s-master2 10.2.8.218:6443 check
    server  k8s-master3 10.2.8.219:6443 check
```

Step 2: start HAProxy from the directory that contains haproxy.cfg.

```bash
docker run -d --name k8s-haproxy \
  --net=host \
  --restart=always \
  -v ${PWD}/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro \
  haproxytech/haproxy-debian:2.3
```

Step 3: log in to the HAProxy statistics page at http://10.2.8.217:8888/stats with the `stats auth` credentials.

Step 4: configure keepalived on the first machine. `interface` and the `dev` of the virtual IP must both name the host's actual network interface (ens192 here).

```
! Configuration File for keepalived
! keepalived.conf - master 1
global_defs {
   router_id LVS_1
}

vrrp_script checkhaproxy
{
    script "/usr/bin/check-haproxy.sh"
    interval 2
    weight -30
}

vrrp_instance VI_1 {
    state MASTER
    interface ens192
    virtual_router_id 51
    priority 100
    advert_int 1

    virtual_ipaddress {
        10.2.8.206/24 dev ens192
    }

    authentication {
        auth_type PASS
        auth_pass password
    }

    track_script {
        checkhaproxy
    }
}
```

Step 5: configure keepalived on the second machine. Its priority is lower than the MASTER's: when the health check fails on the MASTER, its priority drops by 30 (to 70), below the BACKUP's 90, so the BACKUP takes over the virtual IP.

```
! Configuration File for keepalived
! keepalived.conf - master 2
global_defs {
   router_id LVS_2
}

vrrp_script checkhaproxy
{
    script "/usr/bin/check-haproxy.sh"
    interval 2
    weight -30
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens192
    virtual_router_id 51
    priority 90
    advert_int 1

    virtual_ipaddress {
        10.2.8.206/24 dev ens192
    }

    authentication {
        auth_type PASS
        auth_pass password
    }

    track_script {
        checkhaproxy
    }
}
```

Step 6: create the health-check script check-haproxy.sh and make it executable with `chmod +x check-haproxy.sh`.

```bash
#!/bin/bash
# Exit 0 if something is listening on port 9443 (HAProxy), otherwise exit 1
count=$(netstat -apn | grep ':9443' | wc -l)
if [ "$count" -gt 0 ]; then
    exit 0
else
    exit 1
fi
```

Step 7: start keepalived from the directory that contains keepalived.conf and check-haproxy.sh.

```bash
docker run -d --name k8s-keepalived \
  --restart=always \
  --net=host \
  --cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW \
  -v ${PWD}/keepalived.conf:/container/service/keepalived/assets/keepalived.conf \
  -v ${PWD}/check-haproxy.sh:/usr/bin/check-haproxy.sh \
  osixia/keepalived:2.0.20 --copy-service
```

Step 8: verify that kube-apiserver is reachable through the virtual IP.

```bash
curl -v -k https://10.2.8.206:9443
```

## 2.4 Deploy the Nodes

Each node also needs /etc/kubernetes/kubeconfig and the files it references (/etc/kubernetes/pki/ca.crt, client.crt, client.key); copy them from a master.

### 1. Download the kubelet and kube-proxy Binaries

```bash
wget https://dl.k8s.io/v1.19.0/kubernetes.tar.gz
tar xf kubernetes.tar.gz
cd kubernetes/cluster
# Downloads kubernetes-server-linux-amd64.tar.gz into kubernetes/server
./get-kube-binaries.sh
cd ../server
tar -xf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
# Copy the executables to /usr/bin
cp kubelet kube-proxy /usr/bin/
```

### 2. Deploy the kubelet Service

Step 1: manage kubelet with systemd.

```ini
# /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service

[Service]
EnvironmentFile=/etc/kubernetes/kubelet
ExecStart=/usr/bin/kubelet $KUBELET_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

Step 2: create the configuration file /etc/kubernetes/kubelet. Set `--hostname-override` to this node's own IP address (192.168.18.3 is an example).

```bash
# /etc/kubernetes/kubelet
KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --config=/etc/kubernetes/kubelet.config \
--hostname-override=192.168.18.3 \
--network-plugin=cni \
--logtostderr=false --log-dir=/var/log/kubernetes --v=0"
```

Step 3: create the configuration file /etc/kubernetes/kubelet.config.

```yaml
# /etc/kubernetes/kubelet.config
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
cgroupDriver: cgroupfs
clusterDNS: ["169.169.0.100"]
clusterDomain: cluster.local
authentication:
  anonymous:
    enabled: true
```

Step 4: start kubelet and enable it at boot.

```bash
mkdir -p /var/log/kubernetes
systemctl daemon-reload
systemctl start kubelet && systemctl enable kubelet
```

### 3. Deploy the kube-proxy Service

Step 1: manage kube-proxy with systemd.

```ini
# /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
EnvironmentFile=/etc/kubernetes/proxy
ExecStart=/usr/bin/kube-proxy $KUBE_PROXY_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

Step 2: create the configuration file /etc/kubernetes/proxy, again with this node's own IP in `--hostname-override`.

```bash
# /etc/kubernetes/proxy
KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--hostname-override=192.168.18.3 \
--proxy-mode=iptables \
--logtostderr=false --log-dir=/var/log/kubernetes --v=0"
```

Step 3: start kube-proxy and enable it at boot.

```bash
systemctl daemon-reload
systemctl start kube-proxy && systemctl enable kube-proxy
```

### 4. Verify the Nodes from a Master with kubectl

```bash
# Check node status
kubectl --kubeconfig=/etc/kubernetes/kubeconfig get nodes
# If nodes are NotReady (no CNI network plugin yet), install Calico
kubectl --kubeconfig=/etc/kubernetes/kubeconfig apply -f "https://docs.projectcalico.org/manifests/calico.yaml"
# If pulling k8s.gcr.io/pause:3.2 fails, pull it from the Aliyun mirror and re-tag it locally on each node
docker pull registry.aliyuncs.com/google_containers/pause:3.2
docker tag registry.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2
```

# 3. CI/CD Solution Selection

# 4. Installing the Harbor 2.3 Image Registry

## 4.1 Install Docker

### 1. Remove Old Docker Versions

```bash
sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine
```

### 2. Add the Repository

```bash
sudo yum install -y yum-utils
sudo yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo
sudo yum repolist   # refresh and list the yum repositories
```

### 3. Install Docker

Install the latest version:

```bash
sudo yum install docker-ce docker-ce-cli containerd.io
```

Or install a specific version:

```bash
# List the available versions, then install one of them
yum list docker-ce --showduplicates | sort -r
sudo yum install docker-ce-<VERSION_STRING> docker-ce-cli-<VERSION_STRING> containerd.io
```

### 4. Move Docker's Data Directory to the Data Disk

```bash
# Do this before Docker's first start (or with Docker stopped)
mkdir -p /tqls_system/ /var/lib/docker
mv /var/lib/docker /tqls_system/
ln -s /tqls_system/docker /var/lib/docker
```

### 5. Start Docker and Enable It at Boot

```bash
systemctl start docker
systemctl enable docker
```

### 6. Check That Docker Is Installed Correctly

```bash
sudo docker run hello-world
```

## 4.2 Install docker-compose

### 1. Download docker-compose

```bash
sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /tqls_system/docker-compose
```

### 2. Make It Executable

```bash
chmod +x /tqls_system/docker-compose
```

### 3. Create a Symbolic Link

```bash
ln -s /tqls_system/docker-compose /usr/bin/docker-compose
```

### 4. Check That docker-compose Is Installed Correctly

```bash
docker-compose --version
```

Expected output: `docker-compose version 1.29.2, build 5becea4c`

## 4.3 Install Harbor

### 1. Download the Offline Installer

Harbor releases: [https://github.com/goharbor/harbor/releases](https://github.com/goharbor/harbor/releases)

```bash
wget https://github.com/goharbor/harbor/releases/download/v1.10.1/harbor-offline-installer-v1.10.1.tgz
```

Extract the package into /tqls_system:

```bash
tar -zxvf harbor-offline-installer-v1.10.1.tgz -C /tqls_system/
```
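### 2. Configure Harbor

```bash
cd /tqls_system/harbor   # enter the Harbor directory
vim harbor.yml           # edit Harbor's configuration file
```

If the package ships only harbor.yml.tmpl (as Harbor 2.x does), copy it to harbor.yml first. Set the following values:

```yaml
hostname: 10.2.8.214            # address Harbor serves on; set it to this host's IP
http:
  port: 80                      # HTTP port
https:
  port: 443                     # HTTPS port
  certificate: /tqls_system/harbor_ssl/10.2.8.214.crt   # server certificate
  private_key: /tqls_system/harbor_ssl/10.2.8.214.key   # server private key
harbor_admin_password: Harbor12345   # password of Harbor's admin user
database:
  password: root123
data_volume: /tqls_system/data  # where Harbor stores its data
```

### 3. Install Harbor

The certificate and key referenced in harbor.yml must exist before these scripts run, so generate them first as described in 4.4. Then run the following in the extracted harbor directory:

```bash
./prepare      # generate Harbor's configuration
./install.sh   # install Harbor
```

## 4.4 Configure the HTTPS Certificate

**In production, obtain the certificate from a certificate authority. In test or development environments you can generate it yourself.**

**(The steps below generate a self-signed certificate.)**

### 1. Create the CA Certificate

Create the certificate directory and work inside it:

```bash
mkdir -p /tqls_system/harbor_ssl/
cd /tqls_system/harbor_ssl/
```

Generate the CA private key:

```bash
openssl genrsa -out ca.key 4096
```

Generate the CA certificate:

```bash
openssl req -x509 -new -nodes -sha512 -days 3650 \
    -subj "/C=CN/ST=Sichuan/L=Chengdu/O=example/OU=Personal/CN=10.2.8.214" \
    -key ca.key \
    -out ca.crt
```

### 2. Generate the Server Certificate Request

Generate the server private key:

```bash
openssl genrsa -out 10.2.8.214.key 4096
```

Generate the certificate signing request (CSR):

```bash
openssl req -sha512 -new \
    -subj "/C=CN/ST=Sichuan/L=Chengdu/O=example/OU=Personal/CN=10.2.8.214" \
    -key 10.2.8.214.key \
    -out 10.2.8.214.csr
```

### 3. Generate the x509 v3 Extension File and Sign the Server Certificate

Do not copy the official Harbor example verbatim here: it uses a domain name, whereas this registry is reached by IP address, so the subject alternative name must be an `IP:` entry.

```bash
cat > v3.ext <<-EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = IP:10.2.8.214
EOF

# Sign the CSR with the CA, applying the extensions above
openssl x509 -req -sha512 -days 3650 \
    -extfile v3.ext \
    -CA ca.crt -CAkey ca.key -CAcreateserial \
    -in 10.2.8.214.csr \
    -out 10.2.8.214.crt
```

### 4. Provide the Certificate to Harbor

In harbor.yml, `certificate` and `private_key` point to /tqls_system/harbor_ssl/, so make sure the generated server certificate and key are in that directory.

### 5. Provide the Certificate to Docker

Docker treats `.crt` files as CA certificates and `.cert` files as client certificates, so convert the server certificate to `.cert` for Docker:

```bash
openssl x509 -inform PEM -in 10.2.8.214.crt -out 10.2.8.214.cert
```

```bash
mkdir -p /etc/docker/certs.d/10.2.8.214/
cp 10.2.8.214.cert /etc/docker/certs.d/10.2.8.214/
cp 10.2.8.214.key /etc/docker/certs.d/10.2.8.214/
cp ca.crt /etc/docker/certs.d/10.2.8.214/
```

### 6. Restart Docker

```bash
systemctl restart docker
```

## 4.5 Start Harbor at Boot

Harbor does not necessarily come back up after the server or the Docker service restarts, so register it as a systemd service. Create /lib/systemd/system/harbor.service with the following content:

```ini
[Unit]
Description=Harbor
After=docker.service systemd-networkd.service systemd-resolved.service
Requires=docker.service
Documentation=http://github.com/vmware/harbor

[Service]
Type=simple
Restart=on-failure
RestartSec=5
# The paths must match where docker-compose and Harbor are installed
ExecStart=/usr/bin/docker-compose -f /tqls_system/harbor/docker-compose.yml up
ExecStop=/usr/bin/docker-compose -f /tqls_system/harbor/docker-compose.yml down

[Install]
WantedBy=multi-user.target
```

```bash
systemctl daemon-reload
systemctl enable harbor   # start Harbor at boot
systemctl start harbor    # start Harbor now
```

## 4.6 Verification and Testing

### 1. Log In with a Browser over HTTPS

Open Harbor at the IP address (or domain name) and port configured in harbor.yml, for example https://10.2.8.214.

### 2. Test Logging In with Docker

Log in to Harbor from another server, 10.2.8.215:

```bash
docker login 10.2.8.214
```

The login fails with:

```
Error response from daemon: Get https://10.2.8.214/v2/: x509: certificate signed by unknown authority
```

### 3. Trust the CA Certificate (It Is Self-Signed)

Copy ca.crt to the client host (10.2.8.215) and place it in Docker's directory for this registry:

```bash
mkdir -p /etc/docker/certs.d/10.2.8.214
cp ca.crt /etc/docker/certs.d/10.2.8.214/ca.crt
```

Run `docker login 10.2.8.214` again; `Login Succeeded` means the login worked.

## 4.7 Push and Pull Images

### 1. Tag an Image

```bash
docker tag SOURCE_IMAGE[:TAG] 10.2.8.214/PROJECT/IMAGE[:TAG]
```

### 2. Push the Image to Harbor

```bash
docker push 10.2.8.214/PROJECT/IMAGE[:TAG]
```

### 3. Pull the Image from Harbor

```bash
docker pull 10.2.8.214/PROJECT/IMAGE[:TAG]
```

# 5. Installing Rancher

After Docker is installed, run:

```shell
docker run -d --privileged --restart=unless-stopped -p 80:80 -p 443:443 \
  -v /tqls_system/rancher_home/rancher:/var/lib/rancher \
  -v /tqls_system/rancher_home/auditlog:/var/log/auditlog \
  --name rancher rancher/rancher:v2.5.7
```

Then log in at [https://10.7.7.106/](https://10.7.7.106/).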
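Rancher needs a few minutes to initialize after its container starts, so any script that continues the installation should wait for it first. A minimal sketch, assuming a POSIX shell; `wait_until` is a hypothetical helper that simply retries an arbitrary command until it succeeds or a timeout expires:

```shell
#!/bin/sh
# wait_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND every INTERVAL seconds until it exits 0;
# gives up with status 1 once TIMEOUT seconds have passed.
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$(( $(date +%s) + timeout ))
  until "$@" >/dev/null 2>&1; do
    if [ "$(date +%s)" -ge "$deadline" ]; then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
  return 0
}
```

For example, `wait_until 600 10 curl -fsk https://10.7.7.106/ping` polls every 10 seconds for up to 10 minutes. The `/ping` path is an assumption (Rancher is commonly expected to answer it with `pong`); any URL that returns HTTP 200 once the UI is up works the same way, and `docker logs -f rancher` shows startup progress in the meantime.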

杨超 (Yang Chao)

May 10, 2022 09:29
