Handler configuration loss in Divide plugin Selectors on the gateway

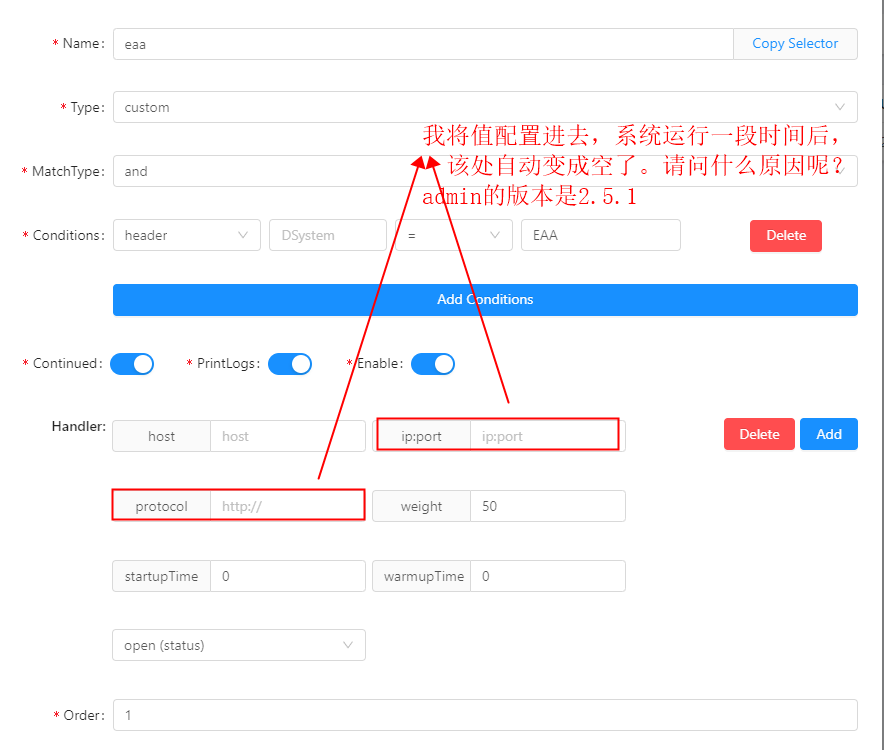

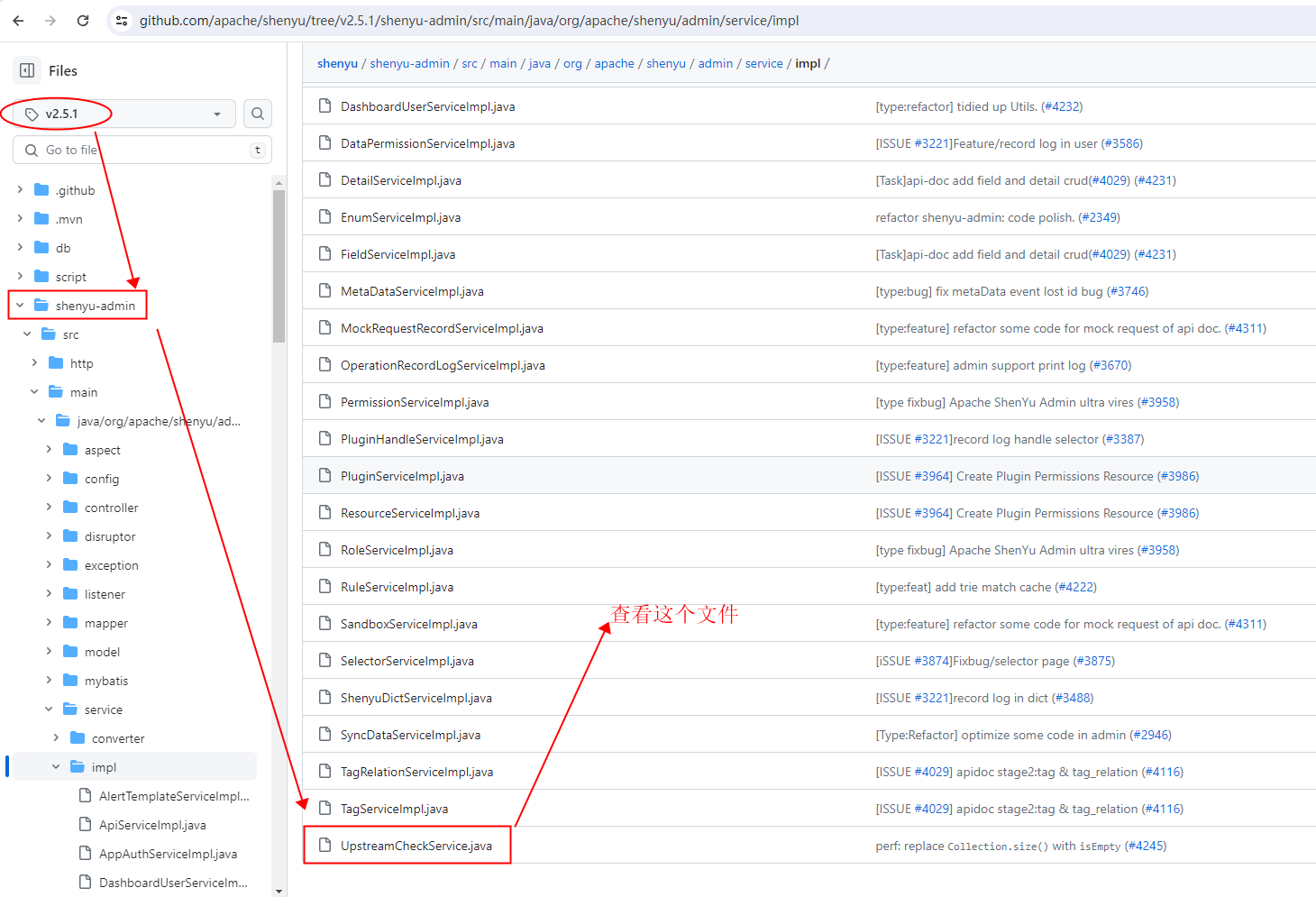

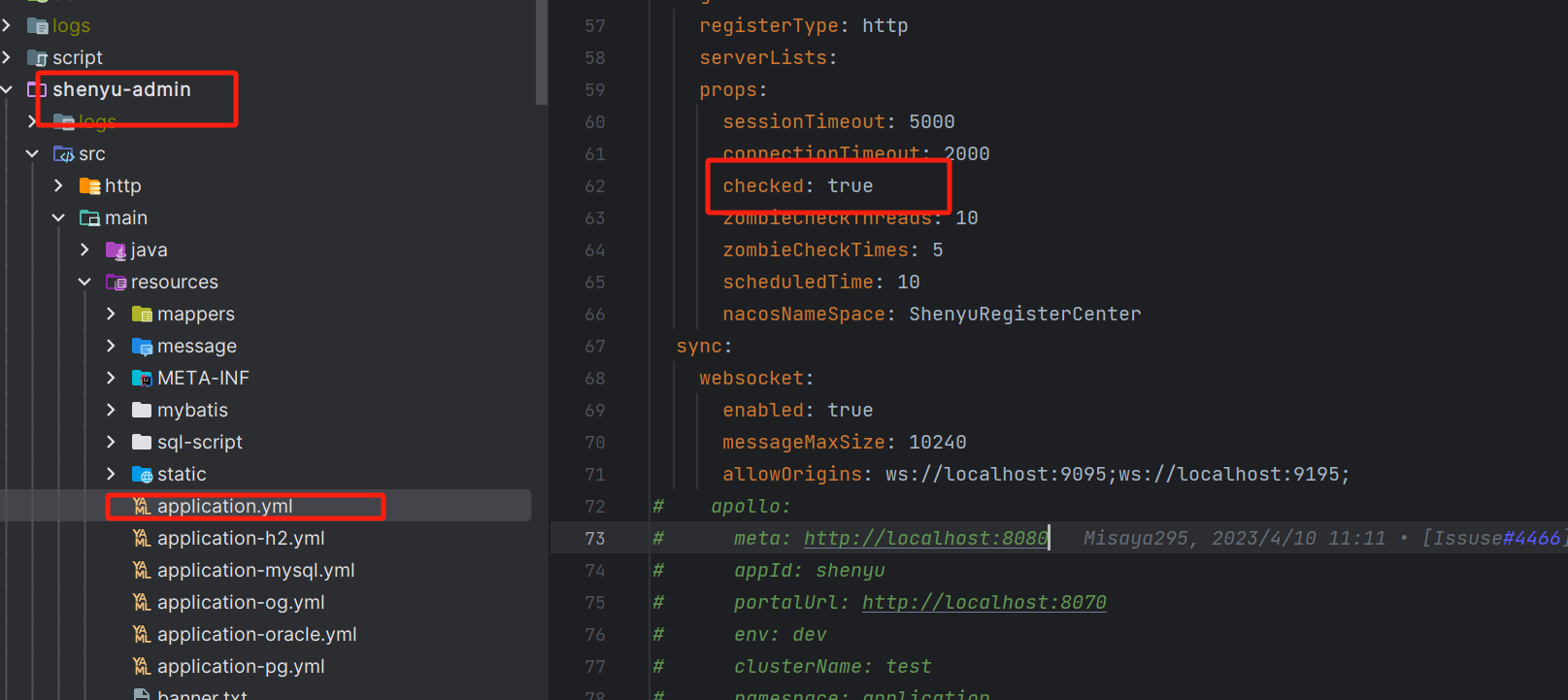

## 1. Background

In production, the Handler configuration of Selectors in the Divide plugin has repeatedly gone missing (see the screenshots above).

The ip:port entries that disappear all belong to high-traffic services. Whenever such a service sees heavy traffic for a period of time, the value in this field becomes empty.

## 2. Troubleshooting

This problem is hard to reproduce. It requires load-testing a service, and during the test the downstream service must handle more concurrency than the gateway.

We went straight to the official resources. Nothing matching turned up in the GitHub Issues, so we asked the official support staff (ShenYu is very good on this front; official engineers are always available to help). They pointed us to a class to read (see the screenshots above).

The workaround is to set `shenyu.register.props.checked` to `false` (see the screenshots above).

## 3. Source code analysis

The full source of the class:

```
/*
 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

package org.apache.shenyu.admin.service.impl;

import com.google.common.collect.Lists;
import com.google.common.collect.Maps;
import com.google.common.collect.Sets;
import org.apache.commons.collections4.CollectionUtils;
import org.apache.commons.collections4.ListUtils;
import org.apache.commons.lang3.StringUtils;
import org.apache.commons.lang3.math.NumberUtils;
import org.apache.shenyu.admin.listener.DataChangedEvent;
import org.apache.shenyu.admin.mapper.PluginMapper;
import org.apache.shenyu.admin.mapper.SelectorConditionMapper;
import org.apache.shenyu.admin.mapper.SelectorMapper;
import org.apache.shenyu.admin.model.entity.PluginDO;
import org.apache.shenyu.admin.model.entity.SelectorDO;
import org.apache.shenyu.admin.model.event.selector.SelectorCreatedEvent;
import org.apache.shenyu.admin.model.event.selector.SelectorUpdatedEvent;
import org.apache.shenyu.admin.model.query.SelectorConditionQuery;
import org.apache.shenyu.admin.service.converter.SelectorHandleConverterFactor;
import org.apache.shenyu.admin.transfer.ConditionTransfer;
import org.apache.shenyu.admin.utils.CommonUpstreamUtils;
import org.apache.shenyu.admin.utils.SelectorUtil;
import org.apache.shenyu.common.concurrent.ShenyuThreadFactory;
import org.apache.shenyu.common.constant.Constants;
import org.apache.shenyu.common.dto.ConditionData;
import org.apache.shenyu.common.dto.SelectorData;
import org.apache.shenyu.common.dto.convert.selector.CommonUpstream;
import org.apache.shenyu.common.dto.convert.selector.DivideUpstream;
import org.apache.shenyu.common.dto.convert.selector.ZombieUpstream;
import org.apache.shenyu.common.enums.ConfigGroupEnum;
import org.apache.shenyu.common.enums.DataEventTypeEnum;
import org.apache.shenyu.common.enums.PluginEnum;
import org.apache.shenyu.common.utils.UpstreamCheckUtils;
import org.apache.shenyu.register.common.config.ShenyuRegisterCenterConfig;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.context.ApplicationEventPublisher;
import org.springframework.context.event.EventListener;
import org.springframework.stereotype.Component;

import javax.annotation.PreDestroy;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.Optional;
import java.util.Properties;
import java.util.Set;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.ScheduledThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.stream.Collectors;

/**
 * This is the upstream check service.
 */
@Component
public class UpstreamCheckService {

    private static final Logger LOG = LoggerFactory.getLogger(UpstreamCheckService.class);

    private static final Map<String, List<CommonUpstream>> UPSTREAM_MAP = Maps.newConcurrentMap();

    private static final Set<Integer> PENDING_SYNC = Sets.newConcurrentHashSet();

    private static final Set<ZombieUpstream> ZOMBIE_SET = Sets.newConcurrentHashSet();

    private static final String REGISTER_TYPE_HTTP = "http";

    private static int zombieRemovalTimes;

    private final int zombieCheckTimes;

    private final int scheduledTime;

    private final String registerType;

    private final boolean checked;

    private final SelectorMapper selectorMapper;

    private final ApplicationEventPublisher eventPublisher;

    private final PluginMapper pluginMapper;

    private final SelectorConditionMapper selectorConditionMapper;

    private final SelectorHandleConverterFactor converterFactor;

    private ScheduledThreadPoolExecutor executor;

    private ScheduledFuture<?> scheduledFuture;

    /**
     * Instantiates a new Upstream check service.
     *
     * @param selectorMapper             the selector mapper
     * @param eventPublisher             the event publisher
     * @param pluginMapper               the plugin mapper
     * @param selectorConditionMapper    the selectorCondition mapper
     * @param shenyuRegisterCenterConfig the shenyu register center config
     * @param converterFactor            the converter factor
     */
    public UpstreamCheckService(final SelectorMapper selectorMapper,
                                final ApplicationEventPublisher eventPublisher,
                                final PluginMapper pluginMapper,
                                final SelectorConditionMapper selectorConditionMapper,
                                final ShenyuRegisterCenterConfig shenyuRegisterCenterConfig,
                                final SelectorHandleConverterFactor converterFactor) {
        this.selectorMapper = selectorMapper;
        this.eventPublisher = eventPublisher;
        this.pluginMapper = pluginMapper;
        this.selectorConditionMapper = selectorConditionMapper;
        this.converterFactor = converterFactor;
        Properties props = shenyuRegisterCenterConfig.getProps();
        this.checked = Boolean.parseBoolean(props.getProperty(Constants.IS_CHECKED, Constants.DEFAULT_CHECK_VALUE));
        this.zombieCheckTimes = Integer.parseInt(props.getProperty(Constants.ZOMBIE_CHECK_TIMES, Constants.ZOMBIE_CHECK_TIMES_VALUE));
        this.scheduledTime = Integer.parseInt(props.getProperty(Constants.SCHEDULED_TIME, Constants.SCHEDULED_TIME_VALUE));
        this.registerType = shenyuRegisterCenterConfig.getRegisterType();
        zombieRemovalTimes = Integer.parseInt(props.getProperty(Constants.ZOMBIE_REMOVAL_TIMES, Constants.ZOMBIE_REMOVAL_TIMES_VALUE));
        if (REGISTER_TYPE_HTTP.equalsIgnoreCase(registerType)) {
            setup();
        }
    }

    /**
     * Set up.
     */
    public void setup() {
        if (checked) {
            this.fetchUpstreamData();
            executor = new ScheduledThreadPoolExecutor(1, ShenyuThreadFactory.create("scheduled-upstream-task", false));
            scheduledFuture = executor.scheduleWithFixedDelay(this::scheduled, 10, scheduledTime, TimeUnit.SECONDS);
        }
    }

    /**
     * Close relative resource on container destroy.
     */
    @PreDestroy
    public void close() {
        if (checked) {
            scheduledFuture.cancel(false);
            executor.shutdown();
        }
    }

    /**
     * Remove by key.
     *
     * @param selectorId the selector id
     */
    public static void removeByKey(final String selectorId) {
        UPSTREAM_MAP.remove(selectorId);
    }

    /**
     * Submit.
     *
     * @param selectorId     the selector id
     * @param commonUpstream the common upstream
     * @return whether this module handles
     */
    public boolean submit(final String selectorId, final CommonUpstream commonUpstream) {
        if (!REGISTER_TYPE_HTTP.equalsIgnoreCase(registerType) || !checked) {
            return false;
        }

        List<CommonUpstream> upstreams = UPSTREAM_MAP.computeIfAbsent(selectorId, k -> new CopyOnWriteArrayList<>());
        if (commonUpstream.isStatus()) {
            Optional<CommonUpstream> exists = upstreams.stream().filter(item -> StringUtils.isNotBlank(item.getUpstreamUrl())
                    && item.getUpstreamUrl().equals(commonUpstream.getUpstreamUrl())).findFirst();
            if (!exists.isPresent()) {
                upstreams.add(commonUpstream);
            } else {
                LOG.info("upstream host {} is exists.", commonUpstream.getUpstreamHost());
            }
            PENDING_SYNC.add(commonUpstream.hashCode());
        } else {
            upstreams.removeIf(item -> item.equals(commonUpstream));
            PENDING_SYNC.add(NumberUtils.INTEGER_ZERO);
        }
        executor.execute(() -> updateHandler(selectorId, upstreams, upstreams));
        return true;
    }

    /**
     * If the health check passes, the service will be added to
     * the normal service list; if the health check fails, the service
     * will not be discarded directly and add to the zombie nodes.
     *
     * <p>Note: This is to be compatible with older versions of clients
     * that do not register with the gateway by listening to
     * {@link org.springframework.context.event.ContextRefreshedEvent},
     * which will cause some problems,
     * check https://github.com/apache/shenyu/issues/3484 for more details.
     *
     * @param selectorId     the selector id
     * @param commonUpstream the common upstream
     * @return whether this module handles
     */
    public boolean checkAndSubmit(final String selectorId, final CommonUpstream commonUpstream) {
        final boolean pass = UpstreamCheckUtils.checkUrl(commonUpstream.getUpstreamUrl());
        if (pass) {
            return submit(selectorId, commonUpstream);
        }
        ZOMBIE_SET.add(ZombieUpstream.transform(commonUpstream, zombieCheckTimes, selectorId));
        LOG.error("add zombie node, url={}", commonUpstream.getUpstreamUrl());
        return true;
    }

    /**
     * Replace.
     *
     * @param selectorId      the selector name
     * @param commonUpstreams the common upstream list
     */
    public void replace(final String selectorId, final List<CommonUpstream> commonUpstreams) {
        if (!REGISTER_TYPE_HTTP.equalsIgnoreCase(registerType)) {
            return;
        }
        UPSTREAM_MAP.put(selectorId, commonUpstreams);
    }

    private void scheduled() {
        try {
            if (!ZOMBIE_SET.isEmpty()) {
                ZOMBIE_SET.parallelStream().forEach(this::checkZombie);
            }
            if (!UPSTREAM_MAP.isEmpty()) {
                UPSTREAM_MAP.forEach(this::check);
            }
        } catch (Exception e) {
            LOG.error("upstream scheduled check error -------- ", e);
        }
    }

    private void checkZombie(final ZombieUpstream zombieUpstream) {
        ZOMBIE_SET.remove(zombieUpstream);
        String selectorId = zombieUpstream.getSelectorId();
        CommonUpstream commonUpstream = zombieUpstream.getCommonUpstream();
        final boolean pass = UpstreamCheckUtils.checkUrl(commonUpstream.getUpstreamUrl());
        if (pass) {
            commonUpstream.setTimestamp(System.currentTimeMillis());
            commonUpstream.setStatus(true);
            LOG.info("UpstreamCacheManager check zombie upstream success the url: {}, host: {} ", commonUpstream.getUpstreamUrl(), commonUpstream.getUpstreamHost());
            List<CommonUpstream> old = ListUtils.unmodifiableList(UPSTREAM_MAP.getOrDefault(selectorId, Collections.emptyList()));
            this.submit(selectorId, commonUpstream);
            updateHandler(selectorId, old, UPSTREAM_MAP.get(selectorId));
        } else {
            LOG.error("check zombie upstream the url={} is fail", commonUpstream.getUpstreamUrl());
            if (zombieUpstream.getZombieCheckTimes() > NumberUtils.INTEGER_ZERO) {
                zombieUpstream.setZombieCheckTimes(zombieUpstream.getZombieCheckTimes() - NumberUtils.INTEGER_ONE);
                ZOMBIE_SET.add(zombieUpstream);
            }
        }
    }

    private void check(final String selectorId, final List<CommonUpstream> upstreamList) {
        List<CommonUpstream> successList = Lists.newArrayListWithCapacity(upstreamList.size());
        for (CommonUpstream commonUpstream : upstreamList) {
            final boolean pass = UpstreamCheckUtils.checkUrl(commonUpstream.getUpstreamUrl());
            if (pass) {
                if (!commonUpstream.isStatus()) {
                    commonUpstream.setTimestamp(System.currentTimeMillis());
                    commonUpstream.setStatus(true);
                    LOG.info("UpstreamCacheManager check success the url: {}, host: {} ", commonUpstream.getUpstreamUrl(), commonUpstream.getUpstreamHost());
                }
                successList.add(commonUpstream);
            } else {
                commonUpstream.setStatus(false);
                ZOMBIE_SET.add(ZombieUpstream.transform(commonUpstream, zombieCheckTimes, selectorId));
                LOG.error("check the url={} is fail ", commonUpstream.getUpstreamUrl());
            }
        }
        updateHandler(selectorId, upstreamList, successList);
    }

    private void updateHandler(final String selectorId, final List<CommonUpstream> upstreamList, final List<CommonUpstream> successList) {
        //No node changes, including zombie node resurrection and live node death
        if (successList.size() == upstreamList.size() && PENDING_SYNC.isEmpty()) {
            return;
        }
        removePendingSync(successList);
        if (!successList.isEmpty()) {
            UPSTREAM_MAP.put(selectorId, successList);
            updateSelectorHandler(selectorId, successList);
        } else {
            UPSTREAM_MAP.remove(selectorId);
            updateSelectorHandler(selectorId, new ArrayList<>());
        }
    }

    private void removePendingSync(final List<CommonUpstream> successList) {
        PENDING_SYNC.removeIf(NumberUtils.INTEGER_ZERO::equals);
        successList.forEach(commonUpstream -> PENDING_SYNC.remove(commonUpstream.hashCode()));
    }

    private void updateSelectorHandler(final String selectorId, final List<CommonUpstream> aliveList) {
        SelectorDO selectorDO = selectorMapper.selectById(selectorId);
        if (Objects.isNull(selectorDO)) {
            return;
        }

        PluginDO pluginDO = pluginMapper.selectById(selectorDO.getPluginId());
        String handler = converterFactor.newInstance(pluginDO.getName()).handler(selectorDO.getHandle(), aliveList);
        selectorDO.setHandle(handler);
        selectorMapper.updateSelective(selectorDO);

        List<ConditionData> conditionDataList = ConditionTransfer.INSTANCE.mapToSelectorDOS(
                selectorConditionMapper.selectByQuery(new SelectorConditionQuery(selectorDO.getId())));
        SelectorData selectorData = SelectorDO.transFrom(selectorDO, pluginDO.getName(), conditionDataList);
        selectorData.setHandle(handler);

        // publish change event.
        eventPublisher.publishEvent(new DataChangedEvent(ConfigGroupEnum.SELECTOR, DataEventTypeEnum.UPDATE, Collections.singletonList(selectorData)));
    }

    /**
     * fetch upstream data from db.
     */
    public void fetchUpstreamData() {
        final List<PluginDO> pluginDOList = pluginMapper.selectByNames(PluginEnum.getUpstreamNames());
        if (CollectionUtils.isEmpty(pluginDOList)) {
            return;
        }
        Map<String, String> pluginMap = pluginDOList.stream().filter(Objects::nonNull)
                .collect(Collectors.toMap(PluginDO::getId, PluginDO::getName, (value1, value2) -> value1));
        final List<SelectorDO> selectorDOList = selectorMapper.findByPluginIds(new ArrayList<>(pluginMap.keySet()));
        long currentTimeMillis = System.currentTimeMillis();
        Optional.ofNullable(selectorDOList).orElseGet(ArrayList::new).stream()
                .filter(selectorDO -> Objects.nonNull(selectorDO) && StringUtils.isNotEmpty(selectorDO.getHandle()))
                .forEach(selectorDO -> {
                    String name = pluginMap.get(selectorDO.getPluginId());
                    List<CommonUpstream> commonUpstreams = converterFactor.newInstance(name).convertUpstream(selectorDO.getHandle())
                            .stream().filter(upstream -> upstream.isStatus() || upstream.getTimestamp() > currentTimeMillis - TimeUnit.SECONDS.toMillis(zombieRemovalTimes))
                            .collect(Collectors.toList());
                    if (CollectionUtils.isNotEmpty(commonUpstreams)) {
                        UPSTREAM_MAP.put(selectorDO.getId(), commonUpstreams);
                        PENDING_SYNC.add(NumberUtils.INTEGER_ZERO);
                    }
                });
    }

    /**
     * listen {@link SelectorCreatedEvent} add data permission.
     *
     * @param event event
     */
    @EventListener(SelectorCreatedEvent.class)
    public void onSelectorCreated(final SelectorCreatedEvent event) {
        final PluginDO plugin = pluginMapper.selectById(event.getSelector().getPluginId());
        List<DivideUpstream> existDivideUpstreams = SelectorUtil.buildDivideUpstream(event.getSelector(), plugin.getName());
        if (CollectionUtils.isNotEmpty(existDivideUpstreams)) {
            replace(event.getSelector().getId(), CommonUpstreamUtils.convertCommonUpstreamList(existDivideUpstreams));
        }
    }

    /**
     * listen {@link SelectorCreatedEvent} add data permission.
     *
     * @param event event
     */
    @EventListener(SelectorUpdatedEvent.class)
    public void onSelectorUpdated(final SelectorUpdatedEvent event) {
        final PluginDO plugin = pluginMapper.selectById(event.getSelector().getPluginId());
        List<DivideUpstream> existDivideUpstreams = SelectorUtil.buildDivideUpstream(event.getSelector(), plugin.getName());
        if (CollectionUtils.isNotEmpty(existDivideUpstreams)) {
            replace(event.getSelector().getId(), CommonUpstreamUtils.convertCommonUpstreamList(existDivideUpstreams));
        }
    }

    /**
     * get the zombie removal time value.
     * @return zombie removal time value
     */
    public static int getZombieRemovalTimes() {
        return zombieRemovalTimes;
    }
}
```

From the source above: when the admin project starts, UpstreamCheckService is registered in the Spring container and its `public UpstreamCheckService(...)` constructor runs. The part to focus on is

```
if (REGISTER_TYPE_HTTP.equalsIgnoreCase(registerType)) {
    setup();
}
```

Per the yml configuration, this condition holds, so setup() runs:

```
public void setup() {
    if (checked) {
        this.fetchUpstreamData();
        executor = new ScheduledThreadPoolExecutor(1, ShenyuThreadFactory.create("scheduled-upstream-task", false));
        scheduledFuture = executor.scheduleWithFixedDelay(this::scheduled, 10, scheduledTime, TimeUnit.SECONDS);
    }
}
```

The `checked` value read in the constructor is true (the default is false; our yml file sets it to true). The following code then executes:

```
this.fetchUpstreamData();
```

```
public void fetchUpstreamData() {
    final List<PluginDO> pluginDOList = pluginMapper.selectByNames(PluginEnum.getUpstreamNames());
    if (CollectionUtils.isEmpty(pluginDOList)) {
        return;
    }
    Map<String, String> pluginMap = pluginDOList.stream().filter(Objects::nonNull)
            .collect(Collectors.toMap(PluginDO::getId, PluginDO::getName, (value1, value2) -> value1));
    final List<SelectorDO> selectorDOList = selectorMapper.findByPluginIds(new ArrayList<>(pluginMap.keySet()));
    long currentTimeMillis = System.currentTimeMillis();
    Optional.ofNullable(selectorDOList).orElseGet(ArrayList::new).stream()
            .filter(selectorDO -> Objects.nonNull(selectorDO) && StringUtils.isNotEmpty(selectorDO.getHandle()))
            .forEach(selectorDO -> {
                String name = pluginMap.get(selectorDO.getPluginId());
                List<CommonUpstream> commonUpstreams = converterFactor.newInstance(name).convertUpstream(selectorDO.getHandle())
                        .stream().filter(upstream -> upstream.isStatus() || upstream.getTimestamp() > currentTimeMillis - TimeUnit.SECONDS.toMillis(zombieRemovalTimes))
                        .collect(Collectors.toList());
                if (CollectionUtils.isNotEmpty(commonUpstreams)) {
                    UPSTREAM_MAP.put(selectorDO.getId(), commonUpstreams);
                    PENDING_SYNC.add(NumberUtils.INTEGER_ZERO);
                }
            });
}
```

A rough reading of the code above:
1. Fetch the plugin list by plugin name (the source shows that only divide, grpc, tars, springCloud and dubbo take part in upstream checking).
2. pluginMap stores pluginId to pluginName.
3. Fetch the selectorList by pluginId.
4. Loop over the selectorList and put each selectorId with its commonUpstreams into UPSTREAM_MAP.

The following code then executes:

```
executor = new ScheduledThreadPoolExecutor(1, ShenyuThreadFactory.create("scheduled-upstream-task", false));
scheduledFuture = executor.scheduleWithFixedDelay(this::scheduled, 10, scheduledTime, TimeUnit.SECONDS);
```

This starts a scheduled task. The first run is 10 s after startup; subsequent runs follow at a fixed delay of `scheduledTime` seconds, which works out to roughly every 10 s here. Each run calls scheduled():

```
private void scheduled() {
    try {
        if (!ZOMBIE_SET.isEmpty()) {
            ZOMBIE_SET.parallelStream().forEach(this::checkZombie);
        }
        if (!UPSTREAM_MAP.isEmpty()) {
            UPSTREAM_MAP.forEach(this::check);
        }
    } catch (Exception e) {
        LOG.error("upstream scheduled check error -------- ", e);
    }
}
```

On the first run ZOMBIE_SET is clearly empty while UPSTREAM_MAP has entries, so check() runs:

```
private void check(final String selectorId, final List<CommonUpstream> upstreamList) {
    List<CommonUpstream> successList = Lists.newArrayListWithCapacity(upstreamList.size());
    for (CommonUpstream commonUpstream : upstreamList) {
        final boolean pass = UpstreamCheckUtils.checkUrl(commonUpstream.getUpstreamUrl());
        if (pass) {
            if (!commonUpstream.isStatus()) {
                commonUpstream.setTimestamp(System.currentTimeMillis());
                commonUpstream.setStatus(true);
                LOG.info("UpstreamCacheManager check success the url: {}, host: {} ", commonUpstream.getUpstreamUrl(), commonUpstream.getUpstreamHost());
            }
            successList.add(commonUpstream);
        } else {
            commonUpstream.setStatus(false);
            ZOMBIE_SET.add(ZombieUpstream.transform(commonUpstream, zombieCheckTimes, selectorId));
            LOG.error("check the url={} is fail ", commonUpstream.getUpstreamUrl());
        }
    }
    updateHandler(selectorId, upstreamList, successList);
}
```

The key call in check() is

```
final boolean pass = UpstreamCheckUtils.checkUrl(commonUpstream.getUpstreamUrl());
```

Following checkUrl() leads to this method:

```
private static boolean isHostConnector(final String host, final int port, final int timeout) {
    try (Socket socket = new Socket()) {
        socket.connect(new InetSocketAddress(host, port), timeout);
    } catch (Exception e) {
        LOG.error("socket connect is error.", e);
        return false;
    }
    return true;
}
```

It does one thing: it opens a socket to the ip:port in the selector's handler and returns false if the connection fails. (Note: normally the socket connection succeeds; it is unclear whether it can fail under high concurrency.) Either way, a failed connection returns false.

```
final boolean pass = UpstreamCheckUtils.checkUrl(commonUpstream.getUpstreamUrl());
if (pass) {...} else {
    commonUpstream.setStatus(false);
    ZOMBIE_SET.add(ZombieUpstream.transform(commonUpstream, zombieCheckTimes, selectorId));
    LOG.error("check the url={} is fail ", commonUpstream.getUpstreamUrl());
}
```

When pass is false, the status in the selector's handler is set to false and the UI shows it as close.

This fully explains why the status in the selector's handler changes from open to close.

## 4. Summary

The steps above can be simplified as follows. When UpstreamCheckService is created as a Spring bean, it starts a scheduled task that runs about every 10 s and does one thing: `socket.connect(new InetSocketAddress(host, port), timeout);`. In other words, every 10 s or so it tries to connect to the url in each selector. When the connection fails, it sets status to false, so forwarding through that selector stops working, which protects the system well.

## 5. Open question

If socket.connect succeeds once the system has initialized, what can make it fail later? High concurrency? (That is how it looks so far. High concurrency causes threads to block, but it is unclear whether it also makes socket.connect fail.)

## 6. Answer from the official team

```
try (Socket socket = new Socket()) {
    socket.connect(new InetSocketAddress(host, port), timeout);
} catch (Exception e) {
    LOG.error("socket connect is error.", e);
    return false;
}
```

The core is this piece of code, socket.connect(ip:port). When the downstream system becomes unavailable (many scenarios can cause this; high concurrency is one of them), the check fails several times and the selector's upstream is taken offline. The handler configuration in the selector is then deleted from the database (not just the ip:port being cleared, as I had previously assumed).

At this point the whole flow is clear.
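To make the "fails several times, then goes offline" behaviour concrete, here is a minimal sketch that mirrors the countdown in `checkZombie()`. It is not ShenYu code: the class `ZombieCountdownSketch`, the `Result` and `Outcome` types, and the methods `recheck` and `canConnect` are hypothetical names introduced for illustration, and the loop compresses into one call what the real service spreads across successive scheduled runs.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.function.BooleanSupplier;

// Hypothetical illustration class; not part of ShenYu.
final class ZombieCountdownSketch {

    /** What finally happens to an upstream that entered the zombie set. */
    public enum Outcome { RECOVERED, DROPPED }

    /** Final outcome plus the number of probes that were performed. */
    public static final class Result {
        public final Outcome outcome;
        public final int probes;

        Result(Outcome outcome, int probes) {
            this.outcome = outcome;
            this.probes = probes;
        }
    }

    /** TCP connect probe in the spirit of the isHostConnector excerpt. */
    public static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /**
     * Re-probes a zombie upstream until it recovers or its check budget runs out.
     * Each loop iteration stands in for one scheduled run of checkZombie().
     */
    public static Result recheck(BooleanSupplier probe, int zombieCheckTimes) {
        int remaining = zombieCheckTimes;
        int probes = 0;
        while (true) {
            probes++;
            if (probe.getAsBoolean()) {
                return new Result(Outcome.RECOVERED, probes);
            }
            if (remaining > 0) {
                remaining--; // like decrementing zombieCheckTimes and re-adding to ZOMBIE_SET
            } else {
                return new Result(Outcome.DROPPED, probes); // not re-added: gone for good
            }
        }
    }

    public static void main(String[] args) {
        Result down = recheck(() -> false, 3);
        System.out.println(down.outcome + " after " + down.probes + " probes"); // DROPPED after 4 probes

        int[] calls = {0};
        Result flaky = recheck(() -> ++calls[0] >= 2, 3);
        System.out.println(flaky.outcome + " after " + flaky.probes + " probes"); // RECOVERED after 2 probes
    }
}
```

To try it against a real address, pass the socket probe as the supplier, for example `recheck(() -> canConnect(host, port, 3000), 5)` with your own host and port. The sketch only models the retry budget; it does not model the database update or the DataChangedEvent that the real service publishes.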

李贤利

January 29, 2024, 17:29
